
Debunking the Top Myths of IoT Development

There’s no sugarcoating it: IoT development is a complex and challenging endeavor. And while it’s certainly not an impossible feat, myths and misconceptions about the field can make it even harder to understand what you’re up against, especially when first embarking on a new IoT project.

We’re here to set the record straight. At Very, we believe IoT is a worthwhile pursuit, and enterprises looking to build a new IoT product or extend an existing one deserve clarity on some of the industry’s toughest talking points. Here are four of the most common IoT development myths, debunked.

IoT Myth 1: IoT simply means connecting a device to WiFi.

At its simplest, an IoT device is just a thing (a machine, sensor, or controller) connected to the internet. By that logic, anyone can turn a device into an IoT device just by connecting it to WiFi, right?

Not quite. For starters, WiFi isn’t the only connectivity option. IoT devices can communicate over several kinds of networks, including cellular, LoRaWAN, and other low-power wireless protocols. On top of that, IoT devices require a full software backend to manage and process the data they collect. Let’s look at how this plays out in practice.

Every IoT project has constraints, which may be business, technical, or location-related, or tied to support and deployment capabilities. These constraints ultimately determine the kind of connectivity an IoT deployment uses.

For example, imagine deploying IoT-enabled monitoring for remote solar panels or cell towers. The constraints for those products would likely look something like this: a BOM cost under a set target, a two-year battery lifespan, operation in extreme temperatures, spotty cellular service, and no WiFi access. Additionally, these deployments should run with little to no human involvement for as long as possible.

Communication via LoRaWAN could be a viable solution in this instance: a LoRa gateway would be installed in an area with strong cell service. However, this option could quickly become cost-prohibitive. An alternative might be to accept the spotty cell service and equip the hardware to buffer a few days’ worth of data. Once a connection is available, that data can be uploaded to a data historian.

This alternative would work well as long as the products are connected to a reliable power supply. On a battery-powered device, however, the same approach would severely limit run time between charges.
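The buffer-then-upload option above is a classic store-and-forward pattern. Here is a minimal Python sketch of it, assuming one reading every 15 minutes, a capacity of three days’ worth of readings, and a policy of dropping the oldest reading when the buffer is full. `StoreAndForwardBuffer`, `Reading`, and the `upload` callback are hypothetical names for illustration, not part of any device SDK or data historian API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque


@dataclass
class Reading:
    timestamp: float  # seconds since epoch
    value: float


class StoreAndForwardBuffer:
    """Bounded queue of readings held while the cellular link is down.

    When the buffer is full, deque(maxlen=...) silently drops the oldest reading.
    """

    def __init__(self, capacity: int) -> None:
        self._pending: Deque[Reading] = deque(maxlen=capacity)

    def record(self, reading: Reading) -> None:
        self._pending.append(reading)

    def flush(self, upload: Callable[[Reading], bool]) -> int:
        """Upload readings oldest-first and stop at the first failure.

        Returns how many readings were uploaded; unsent readings stay queued.
        """
        sent = 0
        while self._pending:
            if not upload(self._pending[0]):
                break  # link dropped again; retry on the next flush
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


# Hypothetical sizing: one reading every 15 minutes for 3 days = 288 slots.
buffer = StoreAndForwardBuffer(capacity=3 * 24 * 4)

# Simulate 300 readings taken while offline; only the newest 288 are kept.
for i in range(300):
    buffer.record(Reading(timestamp=i * 900.0, value=20.0 + 0.01 * i))

uploaded: list = []


def flaky_upload(reading: Reading) -> bool:
    """Stand-in uploader whose link drops after 100 successful sends."""
    if len(uploaded) >= 100:
        return False
    uploaded.append(reading)
    return True


print(buffer.flush(flaky_upload), len(buffer))  # prints: 100 188
```

Dropping the oldest data is only one possible overflow policy; a deployment might instead downsample or stop recording. On real hardware, the buffer would also need to live in non-volatile storage to survive a power loss.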

This is just one example of the complexities and case-by-case variables that affect each IoT deployment. But there’s another consideration: connecting devices to the cloud.

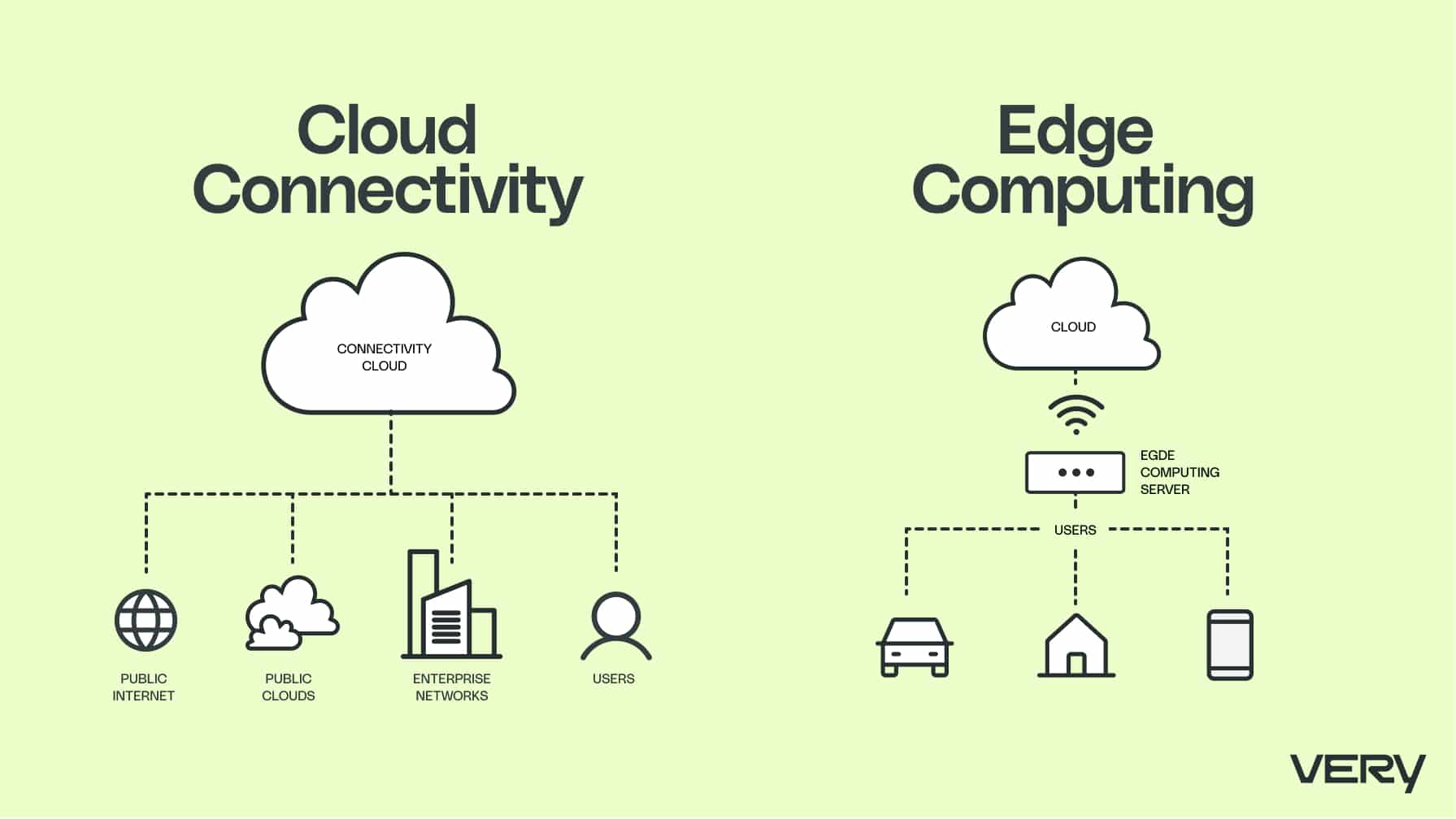

Cloud Connectivity and Edge Computing in IoT Development

Connecting a device to the cloud requires evaluating the full data pipeline and how users will interact with it. The ingestion pipeline that delivers data from devices to the cloud infrastructure should be robust, secure, and scalable. And once the data is ingested, you still need to build the systems for storing, managing, manipulating, and accessing it.

But there’s a step before data ingestion that we find is often overlooked: processing and enriching the data on the edge before it reaches the cloud. Local computation lets the system keep operating at some level even when the network is unavailable. It also shortens reaction times and can reduce the cost of transferring data to the cloud. However, it affects hardware and local network requirements, and too much local processing without asynchronous backup to the cloud limits your visibility into the system. The trade-off between edge and cloud computation should be carefully assessed for each IoT application.
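To make the edge-versus-cloud trade-off concrete, here is a minimal sketch of edge processing, assuming a device that reduces each window of raw samples to a single summary message and checks an alarm limit locally. The class name, the 60-sample window, and the 80-unit alarm limit are hypothetical; a real system would choose its own summary fields and thresholds.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import List, Optional


@dataclass
class WindowSummary:
    count: int
    minimum: float
    maximum: float
    mean: float
    alarms: int  # samples in this window that exceeded the local limit


class EdgeAggregator:
    """Reduces every `window_size` raw samples to one summary for upload."""

    def __init__(self, window_size: int, alarm_limit: float) -> None:
        self.window_size = window_size
        self.alarm_limit = alarm_limit
        self._window: List[float] = []
        self._alarms = 0

    def add(self, value: float) -> Optional[WindowSummary]:
        # React on the device immediately; no cloud round trip is required.
        if value > self.alarm_limit:
            self._alarms += 1
            self.trigger_local_alarm(value)
        self._window.append(value)
        if len(self._window) < self.window_size:
            return None  # window not full yet: nothing to send
        summary = WindowSummary(
            count=len(self._window),
            minimum=min(self._window),
            maximum=max(self._window),
            mean=fmean(self._window),
            alarms=self._alarms,
        )
        self._window, self._alarms = [], 0
        return summary  # only the summary is queued for the cloud

    def trigger_local_alarm(self, value: float) -> None:
        """Placeholder for a hypothetical local action, such as a relay."""


agg = EdgeAggregator(window_size=60, alarm_limit=80.0)
samples = [70.0] * 59 + [85.0]
summaries = [s for s in (agg.add(v) for v in samples) if s is not None]
print(len(samples), "samples ->", len(summaries), "upload")  # prints: 60 samples -> 1 upload
```

Here 60 raw samples become one upload, and the alarm fires locally even if the network is down. The cost is visibility: the cloud only sees the summary, so individual samples are lost unless they are also backed up asynchronously.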

IoT Myth 2: Getting data to the cloud is the hard part. Everything else is easy.

In a perfect world, this statement would be true. Unfortunately, we’ll have to debunk it. Even after data enters the cloud, organizing, processing, and gleaning valuable insights from that data is no walk in the park.

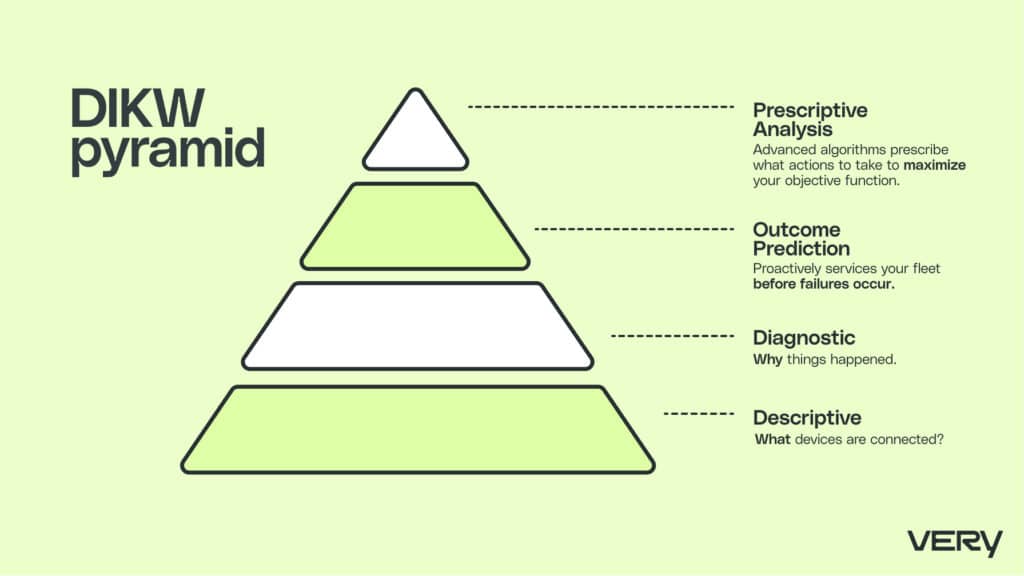

We can visualize this challenge with a Data, Information, Knowledge, and Wisdom (DIKW) pyramid. Each level builds on the one below it: data sits at the base of the pyramid, and wisdom is at the top.

On its own, the raw data at the bottom of the pyramid provides little value. To unlock that value, you’ll need to organize the data to describe exactly which events have occurred, such as a change in temperature or a lapse in inter-device communication. You’ll also need to build a visualization system to present this information to users.
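As an illustration of moving from data to information, the sketch below turns a device’s raw temperature readings into the two kinds of events mentioned above: a significant temperature change and a gap in communication. It assumes readings arrive as `(timestamp_seconds, temperature_c)` pairs sorted by time; the function name, device ID, and thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Event:
    device_id: str
    timestamp: float
    kind: str
    detail: str


def extract_events(
    device_id: str,
    readings: List[Tuple[float, float]],
    min_temp_change_c: float,
    max_gap_s: float,
) -> List[Event]:
    """Compare each reading with the previous one and emit events."""
    events: List[Event] = []
    for (t_prev, temp_prev), (t, temp) in zip(readings, readings[1:]):
        gap = t - t_prev
        if gap > max_gap_s:
            events.append(Event(device_id, t, "communication_gap", f"no data for {gap:.0f} s"))
        if abs(temp - temp_prev) >= min_temp_change_c:
            events.append(
                Event(device_id, t, "temperature_change", f"{temp_prev:.1f} -> {temp:.1f} °C")
            )
    return events


readings = [(0.0, 21.0), (60.0, 21.2), (120.0, 25.5), (900.0, 25.4)]
for event in extract_events("panel-07", readings, min_temp_change_c=2.0, max_gap_s=300.0):
    print(event.timestamp, event.kind, event.detail)
# prints:
# 120.0 temperature_change 21.2 -> 25.5 °C
# 900.0 communication_gap no data for 780 s
```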

The next step is adding context to this data to produce information and knowledge, the next two blocks of the pyramid. Your users should understand how and why certain events occurred. That understanding informs the wisdom at the top of the pyramid, where you can predict with some accuracy what will happen. At this point, you can prescribe the actions needed to achieve your desired outcomes, which brings us to AI and machine learning.

DIKW In Machine Learning & AI Applications

The same pyramid principle applies to machine learning and artificial intelligence applications.

In this framing, the pyramid starts at the information level, with the descriptive phase. Here we can see which devices are connected and their associated telemetry, and classic statistical analysis can be applied to enable basic anomaly detection.
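As one example of classic statistical anomaly detection at the descriptive level, the sketch below flags readings that lie more than a chosen number of standard deviations from the mean (a z-score test). The function name and the default threshold of 3.0 are assumptions for illustration.

```python
from statistics import mean, stdev
from typing import List


def zscore_anomalies(values: List[float], threshold: float = 3.0) -> List[int]:
    """Return indices of values more than `threshold` sample standard
    deviations away from the mean of `values`."""
    if len(values) < 2:
        return []  # stdev needs at least two points
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all readings identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Twenty normal readings followed by one spike.
temperatures = [20.0, 21.0] * 10 + [35.0]
print(zscore_anomalies(temperatures))  # prints: [20]
```

A z-score test assumes roughly stable, bell-shaped data, and the outlier itself inflates the standard deviation; a robust variant based on the median and median absolute deviation is a common alternative.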

As the volume and variety of data grow, and as ad hoc investigations, multi-factor analysis, and visualizations are added, the analysis moves to the diagnostic phase, which helps us understand why things happened. We can think of this as the information and knowledge portions of the pyramid. The next two phases, predictive and prescriptive, reach the top of the pyramid, where AI deployment delivers its highest value.

First, in the predictive phase, machine learning forecasts future outcomes. Predictive maintenance, for example, lets you proactively service your fleet before failures occur. Prediction also enables more advanced anomaly detection by flagging deviations from expected performance. Finally, the prescriptive phase applies advanced algorithms such as reinforcement learning to recommend which actions to take to optimize an objective function, such as minimizing overall cost or improving other KPIs.
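Prediction-based anomaly detection compares each new reading with what a model expected. In the sketch below, a one-step-ahead exponentially weighted moving average (EWMA) stands in for a trained machine learning model; that substitution, the function name, and the `alpha` and `tolerance` values are all assumptions made to keep the example small.

```python
from typing import List, Optional


def residual_anomalies(values: List[float], alpha: float, tolerance: float) -> List[int]:
    """Flag indices where a reading misses its one-step-ahead EWMA forecast
    by more than `tolerance`.

    alpha in (0, 1] controls how quickly the forecast tracks new readings.
    """
    anomalies: List[int] = []
    forecast: Optional[float] = None
    for i, value in enumerate(values):
        if forecast is None:
            forecast = value  # seed the forecast with the first reading
            continue
        if abs(value - forecast) > tolerance:
            anomalies.append(i)
            continue  # keep the spike out of the forecast
        forecast = alpha * value + (1 - alpha) * forecast
    return anomalies


# A slow upward trend with one spike at index 5.
readings = [10.0, 10.2, 10.4, 10.6, 10.8, 15.0, 11.2]
print(residual_anomalies(readings, alpha=0.5, tolerance=1.0))  # prints: [5]
```

Unlike a fixed threshold, the forecast follows the slow upward trend, so only the spike is flagged. Skipping the forecast update on anomalous readings keeps spikes from skewing the baseline, but it also means a genuine level shift would keep being flagged until the model is reset or retrained.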

IoT Myth 3: As long as you have strong technical leaders in every domain, a program manager just needs to track the schedule and tasks.

Strong technical leaders are certainly a requirement for successful IoT projects, but program managers are there for much more than scheduling support. Program managers act as the coach on the metaphorical IoT development playing field; their guidance and leadership help engineers move through the field more efficiently.

At Very, our chief engineers, tech leads, and technical program managers steer every large project in tandem. Together, they ensure that our team matches the best technical solutions to the client’s requirements. Playing to the strengths of each of these roles is what makes a project successful.

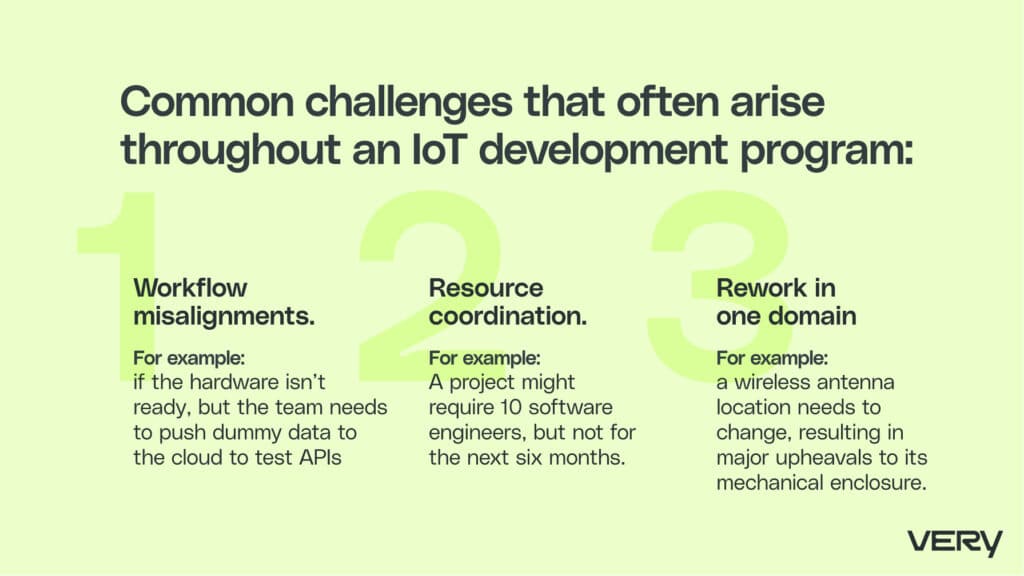

Program managers navigate cross-functional teams through common challenges that often arise throughout an IoT development program, such as:

- Workflow misalignments. Program managers create interfaces that unblock teams when their workflows aren’t aligned. For example, the hardware may not be ready, but the team needs to push dummy data to the cloud to test APIs. Or perhaps the API isn’t ready yet, but the team needs to test OTA updates on hardware. Program managers coordinate with teams to ensure projects can be completed as planned, even in the face of unforeseen hiccups.

- Resource coordination. Teams aren’t always needed at the same time. A project might require 10 software engineers, but not for the next six months. It’s the program manager’s job to ensure the project has resources available at the times they’re needed.

- Cross-domain rework. Rework in one domain can ripple into others. For example, if a wireless antenna’s location needs to change, the mechanical enclosure may require major changes.
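The dummy-data workaround in the first bullet can be as simple as a script that emits messages in the payload format the hardware and cloud teams have agreed on. The sketch below assumes a JSON payload with hypothetical `device_id`, `timestamp`, `temperature_c`, and `battery_pct` fields; it only generates messages, leaving the transport (HTTP, MQTT, or another protocol) to whatever the team’s ingestion API uses.

```python
import json
import random
from datetime import datetime, timedelta, timezone
from typing import Iterator


def fake_telemetry(
    device_id: str, start: datetime, interval_s: int, count: int, seed: int = 0
) -> Iterator[str]:
    """Yield JSON messages that mimic what the real device is expected to send."""
    rng = random.Random(seed)  # seeded so repeated test runs send identical data
    for i in range(count):
        payload = {
            "device_id": device_id,
            "timestamp": (start + timedelta(seconds=i * interval_s)).isoformat(),
            "temperature_c": round(rng.gauss(22.0, 0.5), 2),
            "battery_pct": max(0, 100 - i),  # drains 1% per message
        }
        yield json.dumps(payload)


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
for message in fake_telemetry("sim-001", start, interval_s=60, count=3):
    print(message)  # in practice, send this to the ingestion API instead
```

Because the generator is seeded, the cloud team can replay the exact same stream while debugging, then swap in real hardware once it is ready.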

Program management is often overlooked — but without it, the engineering team is like an orchestra without a conductor. There may be some excellent work coming from individuals, but without guidance from the podium, the whole orchestra is a little off-beat.

IoT Myth 4: It takes years to discover if IoT will bring value to the table.

Enterprise leaders often ask whether IoT is worth the investment if it could take years to see returns. Understandably, pouring millions of dollars into a long-haul strategy can seem too risky.

However, we’ve found that constant iteration, testing early and often, can quickly surface the benefits of investing in IoT development. You don’t need to wait years to find out if IoT is right for your business. Whether you’re adding IoT components to an existing product or designing a new one from scratch, your teams will continuously research, test, and measure to benchmark progress toward your goals. Iterative testing helps refine a product strategy’s design and direction over time. And because each stage of testing builds on the last, it reduces the risk of needing dramatic pivots to recalibrate your designs.

Unlocking The Value of Design Discovery and Research

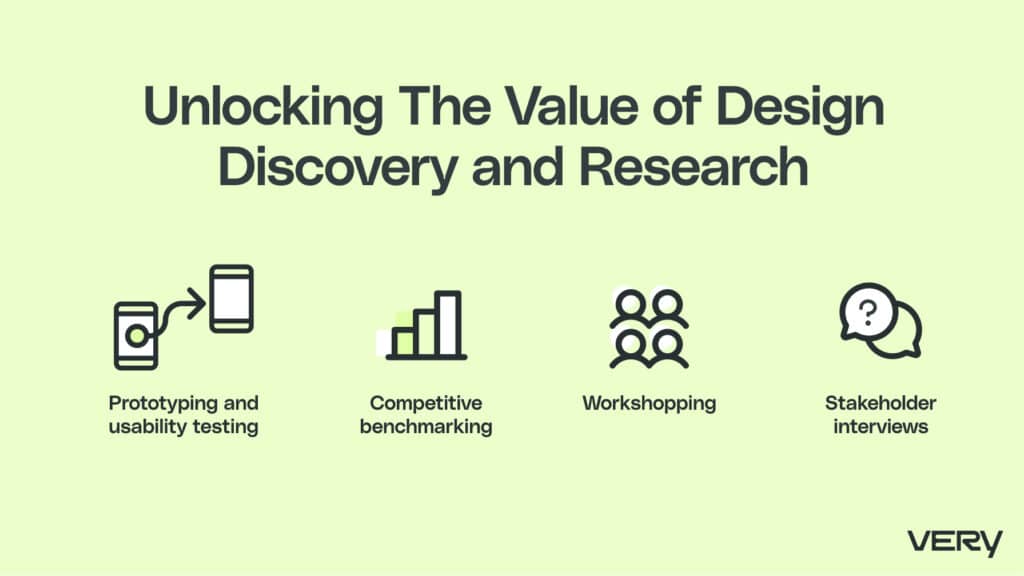

At Very, we believe value is not just something that’s achieved when you cross the finish line. We’ve discovered that value is unlocked throughout the entire design discovery and research phase through a variety of methods, including:

- Stakeholder interviews, which provide firsthand insight into the ideal value to be delivered to the business, stakeholders, and users of a product or service.

- Workshopping with clients to identify insightful and actionable items, rather than just what’s presentable. We like to define several potential paths forward, identify the pros and cons, and then make testable decisions.

- Competitive benchmarking to look at how other products approach similar problems. This can reveal pitfalls and efficiency gains, and it helps inform early-stage decisions. We typically see this take place at the start of a new project, but it can be revisited if desired outcomes change.

- Prototyping and usability testing. Designers often create early-stage, interactive prototypes to test how real users would interact with and gain value from the product. We use a handful of different methods to put these prototypes in front of real users and use this opportunity to get a balanced view of what’s actually useful.

Testing Software Early and Often

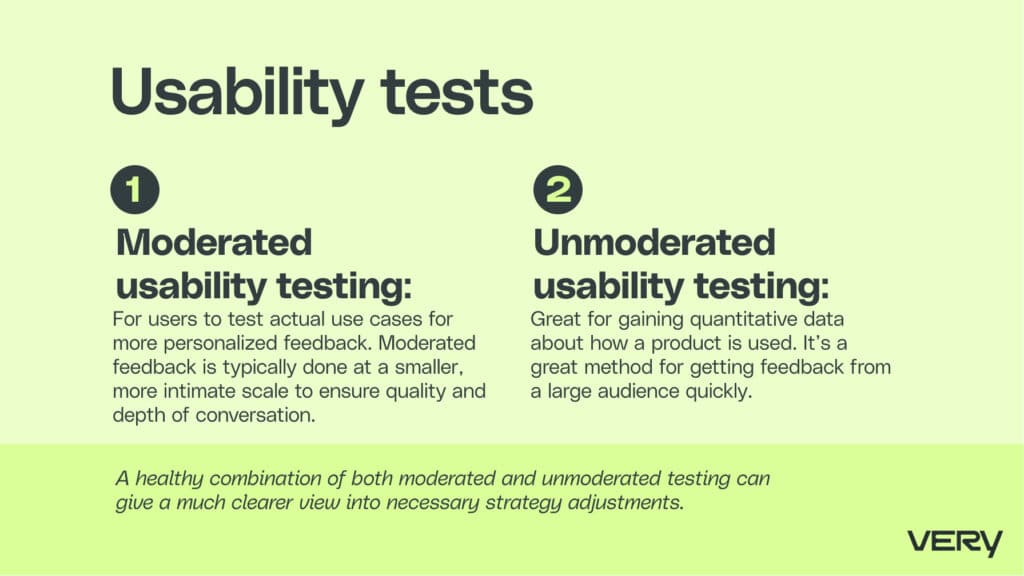

We use Figma as our initial prototyping and testing tool. Once our prototypes are finished, we move on to one of two types of usability testing.

- Moderated usability testing lets users work through actual use cases with a moderator present, yielding more personalized feedback. Moderated sessions are typically run at a smaller, more intimate scale to ensure quality and depth of conversation.

- Unmoderated usability testing is well suited to gathering quantitative data about how a product is used. It’s a great method for getting feedback from a large audience quickly. A healthy combination of moderated and unmoderated testing can give a much clearer view of necessary strategy adjustments.

Testing Hardware Early and Often

Hardware testing requires a different approach. Rather than developing injection-molded plastics and PCBA builds right off the bat, hardware testing begins with a proof of concept (POC) phase.

The goal of the POC phase is to build quick, safe, and functional hardware prototypes that represent the most valuable product for the given problem or market space. This allows the client to test the value proposition on a small scale. POCs aren’t entirely rudimentary, but they don’t need full production tooling at this initial stage. They also let enterprises limit their financial exposure before committing to a larger build, while collecting valuable data if they need to raise capital for larger-scale market testing. Our scale and experience in this space allow us to build most POC hardware in less than 16 weeks, with functioning applications, firmware, and backend to support the IoT ecosystem.

Final Thoughts

IoT development presents significant challenges, often compounded by common myths and misconceptions. But complex doesn’t mean out of reach. By looking past these myths, enterprises can pursue IoT initiatives with clarity and confidence and recognize their potential for innovation and growth. A realistic understanding of IoT’s complexities is what makes it possible to harness its value.