
Machine Learning Algorithms: What is a Neural Network?

Machines have brains. This, we already know. But increasingly, their brains are able to solve the sorts of problems that, until recently, humans were uniquely good at. Neural networks, as the name suggests, are modeled on neurons in the brain. They are a form of artificial intelligence used to untangle and break down extremely complex relationships in data.

What sets neural networks apart from other machine-learning algorithms is that they make use of an architecture inspired by the neurons in the brain. “A brain neuron receives an input and based on that input, fires off an output that is used by another neuron. The neural network simulates this behavior in learning about collected data and then predicting outcomes,” Mark Stadtmueller, VP of product strategy at AI platform provider Lucd, explains to CMS Wire.
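To make the quote concrete, here is a minimal sketch of a single artificial neuron in Python. It receives inputs, combines them, and "fires" an output that other neurons could consume. The sigmoid activation and the specific weights are illustrative assumptions. Real networks use a variety of activation functions and learn their weights from data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1); an assumed activation."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Combine the inputs (weighted sum plus bias), then 'fire' via the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# The returned value could serve as one input to neurons in the next layer.
print(neuron([1.0, 1.0], [0.5, -0.5], 0.0))  # 0.5: the weighted sum is exactly 0
print(neuron([1.0, 0.0], [2.0, -1.0], -1.0))  # sigmoid(1), roughly 0.731
```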

It’s fascinating, but before we go any deeper, let’s back up and look at neural networks in the context of artificial intelligence and machine learning.

Putting It In Context

Neural networks are one approach to machine learning, which is one application of AI. Let’s break it down.

- Artificial intelligence is the concept of machines being able to perform tasks that seemingly require human intelligence.

- Machine learning, as we’ve discussed before, is one application of artificial intelligence. It involves giving computers access to a trove of data and letting them search for optimal solutions. Machine learning algorithms are able to improve through experience with data, without being explicitly programmed for each task. In other words, they find patterns in the data and apply those patterns to new challenges in the future.

- Deep learning is a subset of machine learning that uses neural networks with many layers. A deep neural network analyzes data using representations it learns for itself, somewhat akin to the way a person would look at a problem. In traditional machine learning, the algorithm is given a set of relevant features to analyze; in deep learning, the algorithm is given raw data and derives the features itself.
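The idea of finding a pattern in data and applying it to new inputs can be sketched in a few lines of Python. This is an illustration under simple assumptions, not a neural network. It fits a straight line to five made-up points by gradient descent, then uses the learned slope and intercept on an input it has never seen. The data, learning rate, and step count are arbitrary choices for the demo.

```python
def fit_line(xs, ys, learning_rate=0.01, steps=5000):
    """Learn slope w and intercept b that minimize the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step both parameters a little way downhill.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical training examples, made up from the rule y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 2), round(b, 2))  # the learned pattern
print(round(w * 10 + b, 1))      # applied to an input outside the training data
```

The rule y = 2x + 1 appears only in how the sample data was made up; `fit_line` recovers it from the examples alone.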

Deep Neural Networks

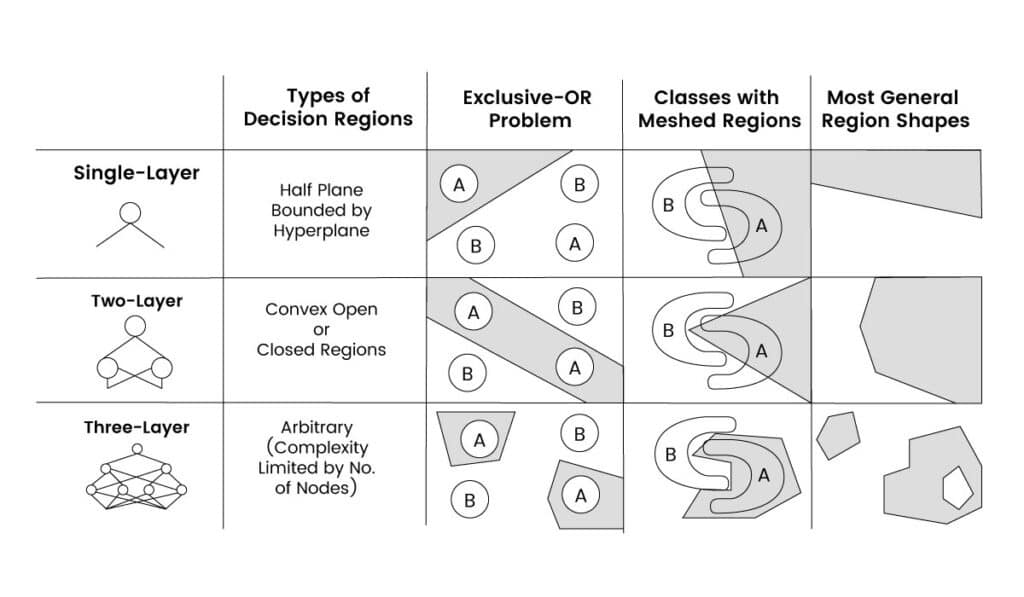

A neural network is typically built from at least three layers of neurons: the input layer, one or more hidden layers, and the output layer. The hidden layers in between consist of many neurons, with connections between the layers. As the neural network “learns” the data, the weights, or strengths, of the connections between these neurons are “fine-tuned,” allowing the network to make accurate predictions.
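Here is a minimal sketch of a network with one hidden layer, written in plain Python. It assumes sigmoid activations and uses plain gradient descent with backpropagation as the "fine-tuning" step, a common textbook choice rather than the only one. The class name `TinyNetwork`, the XOR training data, and the learning rate are illustrative.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNetwork:
    """Input layer (2 neurons) -> hidden layer (n_hidden) -> output layer (1)."""

    def __init__(self, n_hidden=4, seed=0):
        rng = random.Random(seed)
        # Connection weights start small and random; training fine-tunes them.
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_out = 0.0

    def forward(self, x):
        """Return the hidden activations and the network's prediction for input x."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        out = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out)
        return hidden, out

    def train_step(self, x, target, lr=0.5):
        """One gradient-descent step on the loss 0.5 * (out - target) ** 2."""
        hidden, out = self.forward(x)
        # Error signal at the output neuron (loss derivative times sigmoid slope).
        delta_out = (out - target) * out * (1 - out)
        # Send the error back to each hidden neuron through its outgoing weight.
        delta_hidden = [delta_out * w * h * (1 - h) for w, h in zip(self.w_out, hidden)]
        # Nudge every weight and bias against its gradient.
        for j, h in enumerate(hidden):
            self.w_out[j] -= lr * delta_out * h
        self.b_out -= lr * delta_out
        for j, d in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                self.w_hidden[j][i] -= lr * d * xi
            self.b_hidden[j] -= lr * d

    def loss(self, data):
        """Mean squared error over a list of (input, target) pairs."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# XOR: a pattern no single neuron can learn, but a hidden layer can.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
net = TinyNetwork(n_hidden=4, seed=0)
before = net.loss(data)
for epoch in range(5000):
    for x, t in data:
        net.train_step(x, t)
after = net.loss(data)
print(f"mean squared error before: {before:.3f}, after: {after:.3f}")
```

XOR is used because no single neuron can separate its outputs, so the hidden layer has to do real work. The printed errors let you check that training actually reduced the loss.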

As we’ve discussed, neural network machine learning algorithms are modeled on the way the brain works, and specifically on the way it represents information.

When a neural network has many layers, it’s called a deep neural network, and the process of training and using deep neural networks is called deep learning.

The term “deep neural network” generally refers to a particularly complex neural network. Deep networks have more layers (as many as 1,000) and, typically, more neurons per layer. With more layers and more neurons, networks can handle increasingly complex tasks, but they also take longer to train. Because GPUs are optimized for working with matrices, and the computations inside a neural network are largely linear algebra (chiefly matrix multiplications), the availability of powerful GPUs has made building deep neural networks feasible.
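The linear-algebra point can be illustrated with a minimal sketch. Each layer multiplies the whole batch of activations by a weight matrix, and a deeper network simply applies more of those multiplications in sequence. It is written in plain Python for readability, with biases omitted and ReLU assumed as the activation between layers. In practice this work is handed to GPU-backed libraries, because the matrix multiplications dominate the cost.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def relu(matrix):
    """Element-wise ReLU: keep positive values, replace negatives with 0."""
    return [[max(0.0, v) for v in row] for row in matrix]


def deep_forward(batch, layers):
    """Push a batch of examples through a stack of layers.

    batch  -- list of input rows, one row per example
    layers -- list of weight matrices; its length is the network's depth
    """
    activations = batch
    for depth, weights in enumerate(layers):
        activations = matmul(activations, weights)  # one matrix multiply per layer
        if depth < len(layers) - 1:  # nonlinearity between layers, not after the output
            activations = relu(activations)
    return activations
```

For example, `deep_forward([[1, -2], [3, 4]], [[[1, 0], [0, 1]], [[1], [1]]])` passes two examples through an identity layer and then a summing layer, returning `[[1.0], [7]]`. Adding depth means appending another matrix to `layers`.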

In a “classic” feed-forward neural network, information flows in a single direction, from the input layer to the output layer, and each layer is fully connected to its neighbors. However, there are two other types of neural networks that are particularly well-suited for certain problems: convolutional neural networks (CNNs) and recurrent neural networks (RNNs).
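"Fully connected" has a concrete cost: every neuron in one layer has a weight to every neuron in the next. A short sketch that counts the resulting parameters shows why adding layers and neurons makes training more expensive. The layer sizes below are hypothetical (784 inputs would correspond to a 28×28-pixel image), and one bias per non-input neuron is assumed.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feed-forward network.

    Each pair of neighboring layers with n_in and n_out neurons contributes
    n_in * n_out connection weights plus n_out biases (one per receiving neuron).
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


print(count_parameters([784, 128, 10]))            # 101770
print(count_parameters([784, 128, 128, 128, 10]))  # 134794: two extra hidden layers
```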

Convolutional Neural Networks

Convolutional neural networks (CNNs) are frequently used for the tasks of image recognition and classification.

For example, suppose that you have a set of photographs and you want to determine whether a cat is present in each image. CNNs process images from the ground up. Neurons that are located earlier in the network are responsible for examining small windows of pixels and detecting simple, small features such as edges and corners. These outputs are then fed into neurons in the intermediate layers, which look for larger features such as whiskers, noses, and ears. This second set of outputs is used to make a final judgment about whether the image contains a cat.
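The "small windows of pixels" idea is the convolution operation. The sketch below slides a 3×3 kernel over a tiny made-up image. The kernel here is a hand-picked vertical-edge detector; in an actual CNN the kernel values are learned during training, and many kernels run in parallel.

```python
def convolve2d(image, kernel):
    """Slide a small kernel over the image ("valid" mode, stride 1).

    Each output value is the weighted sum of one small window of pixels,
    so the kernel acts as a local feature detector. Like most CNN libraries,
    this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


# A tiny grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
# Hand-picked vertical-edge detector; a real CNN would learn these weights.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(convolve2d(image, vertical_edge))  # [[0, 3, 3, 0]]: strong only near the edge
```

Later layers would take maps like this one as their input, combining simple edge responses into larger features.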

CNNs are revolutionary because they take the task of localized feature extraction out of the hands of human beings. Before CNNs, researchers often had to decide manually which characteristics of an image were most important for detecting a cat. CNNs, by contrast, build up these feature representations automatically, determining for themselves which parts of the image are the most meaningful.

Recurrent Neural Networks

Whereas CNNs are well-suited for working with image data, recurrent neural networks (RNNs) are a strong choice for sequential data that unfolds over time, in tasks such as handwriting recognition and speech recognition.

Just as you can’t detect a cat by looking at a single pixel, you can’t recognize text or speech by looking at a single letter or syllable. To comprehend natural language, you need to understand not only the current letter or syllable but also its context.

RNNs are capable of “remembering” past information: the network’s outputs from earlier steps are fed back in as inputs to later computations. By including loops in the network model, information from previous steps can persist over time, helping the network make smarter decisions. Long short-term memory (LSTM) units or gated recurrent units (GRUs) can be used in an RNN to help it remember important details and forget irrelevant ones.
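A minimal sketch of the recurrence, assuming a single hidden unit, a tanh activation, and hand-picked scalar weights. The names `w_x`, `w_h`, and `b` are illustrative, not part of any library. The loop is the "memory": each new state is computed from the current input and the previous state.

```python
import math


def rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Run a one-unit vanilla RNN over a sequence and return every hidden state.

    The new state depends on the current input *and* on the previous state,
    which is fed back in through the recurrent weight w_h.
    """
    h = 0.0  # the memory starts empty
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Same final input (0.0), different histories, different final states.
print(rnn([1.0, 0.0, 0.0])[-1])  # still positive: the first input is "remembered"
print(rnn([0.0, 0.0, 0.0])[-1])  # 0.0: nothing to remember
```

In this toy setup the trace of the first input shrinks with every step, a rough picture of why plain RNNs struggle with long-range context and why gated units such as LSTMs and GRUs are used.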

A Historical Context

All of this may feel contemporary, but it goes back decades. Big data consultant Bernard Marr and Import.io, among many others, have compiled the history of neural networks and deep learning. Here’s a short overview:

- In 1943, Walter Pitts, a logician, and Warren McCulloch, a neuroscientist, created a mathematical model of a neural network. They proposed a combination of mathematics and algorithms that aimed to mirror human thought processes. The model, dubbed the McCulloch-Pitts neuron, has of course evolved, but its core idea remains the basis of today’s artificial neurons.

- In 1980, Kunihiko Fukushima proposed the Neocognitron, a hierarchical, multilayered artificial neural network used for handwriting recognition and other pattern-recognition tasks.

- In the mid-2000s, the term “deep learning” gained traction after papers by Geoffrey Hinton, Ruslan Salakhutdinov, and others showed how neural networks could be pre-trained a layer at a time.

- In 2015, Facebook implemented DeepFace to automatically tag and identify users in photographs.

- In 2016, Google’s AlphaGo program beat a top-ranked international Go player.

Games, especially strategy games, have been a great way to test and demonstrate the power of neural networks. But there are also numerous, more practical applications.

Real-World Applications

“For companies looking to predict user patterns or how investments will grow, the ability to mobilize artificial intelligence can save labor and protect investments. For consumers trying to understand the world around them, AI can reveal patterns of human behavior and help to restructure our choices,” Terence Mills, CEO of AI.io and Moonshot, writes in a piece for Forbes.

Artificial intelligence applications are wide-ranging. Some of the most exciting are in healthcare:

- Research from 2016 suggests that systems with neural network machine learning may aid decision-making in orthodontic treatment planning.

- A research paper published in 2012 discusses brain-tumor diagnosis systems based on neural networks.

- PAPNET, developed in the 1990s, is still being used to identify cervical cancer. From the 1994 research paper: “The PAPNET Cytological Screening System uses neural networks to automatically analyze conventional smears by locating and recognizing potentially abnormal cells.”

Many interesting business applications use deep neural networks for image classification: A neural network can identify and discern relationships among images, placing each in an appropriate category. The business implications are tremendous, Mills says in his Forbes column. “You could have software crawl through a given network looking for instances of your products. You could figure out if a celebrity has been wearing a piece of jewelry that you created. You could even see if your cafe has been featured in any shots of a show made in your neighborhood.”

If you’ve watched a few too many dystopian science fiction movies, you may be starting to worry. You probably don’t need to. As Marr explains,

“The promise of deep learning is not that computers will start to think like humans. That’s a bit like asking an apple to become an orange. Rather, it demonstrates that given a large enough data set, fast enough processors, and a sophisticated enough algorithm, computers can begin to accomplish tasks that used to be completely left in the realm of human perception — like recognizing cat videos on the web (and other, perhaps more useful purposes).”

If you want to learn more about the theory behind neural networks, this series of videos provides a fantastic overview. Coursera also offers a series of lectures via YouTube, and a simple Google search will yield dozens of other videos and courses.

As for us? We’re excited by the possibilities — and relieved that HAL isn’t one of them.

Speak with a team member today to learn more about how machine learning can improve your next data science project.